GRADE: Generating Realistic Animated Dynamic Environments

Elia Bonetto (1, 2, *), Chenghao Xu (1, 3), Aamir Ahmad (2, 1)

(1) Max Planck Institute for Intelligent Systems, (2) University of Stuttgart, (3) Delft University of Technology

(*) Corresponding author


Code (Isaac Sim) | Code (Animated SMPL to USD) | Code (3DFront to USD) | Code (Tools and evaluation)

Introduction

With GRADE, we provide a pipeline to easily simulate robots and collect data in photorealistic dynamic environments. By enabling easy data generation and precise, custom simulations, it can greatly simplify research in robotics and beyond. The project is built on NVIDIA Isaac Sim, Omniverse, and the open-source USD format.

In our first work, we focused on four main components: (i) indoor environments, (ii) dynamic assets, (iii) robot/camera setup and control, and (iv) simulation control, data processing, and extra tools. Using our framework, we generated an indoor dynamic environment dataset and showcased various scenarios, such as a heterogeneous multi-robot setup and outdoor video capturing. This dataset has been used to extensively test various SLAM libraries and to assess the usability of synthetic data for human detection and segmentation tasks.

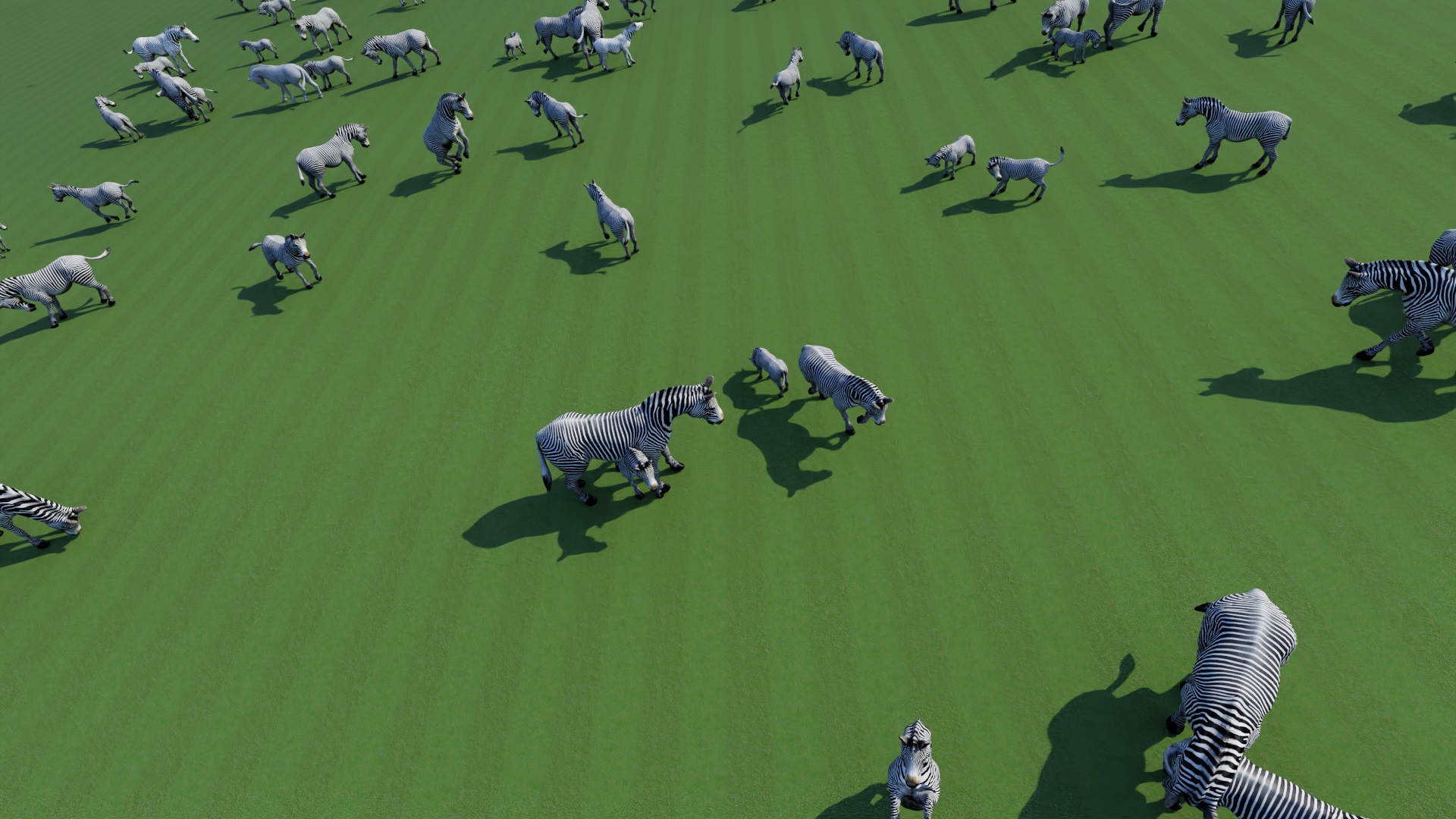

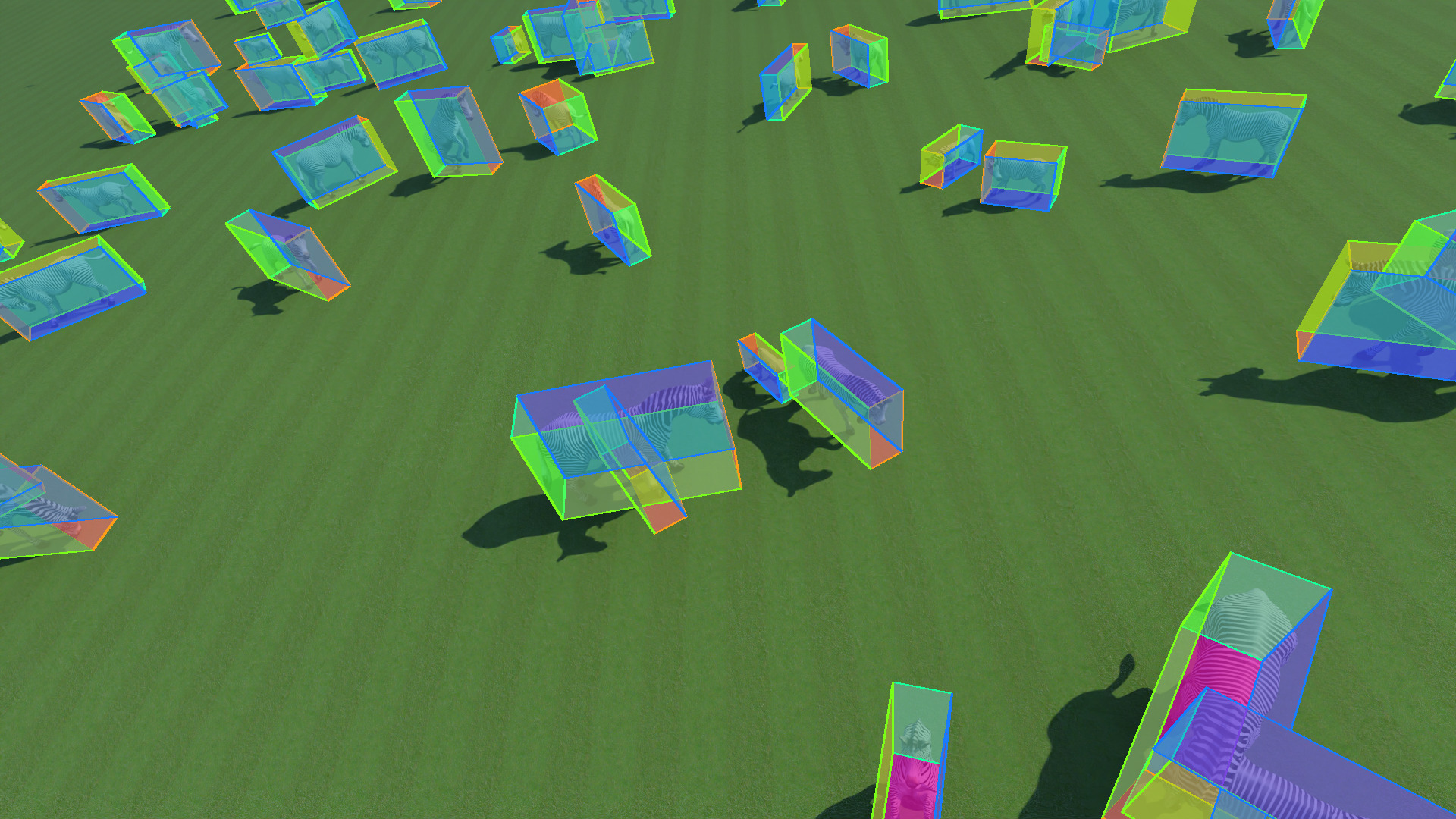

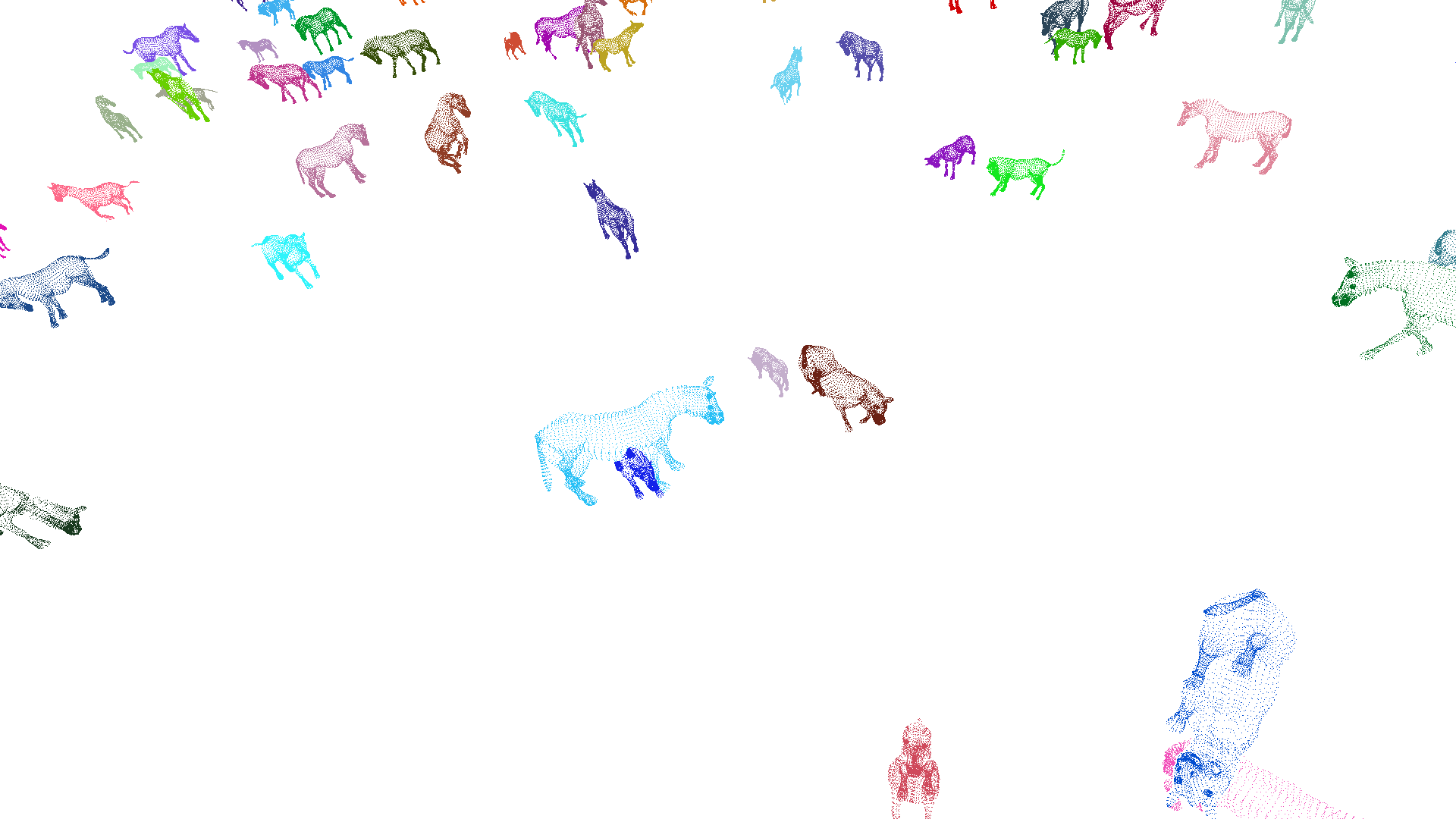

We then used the same framework to generate a dataset of zebras captured outdoors from aerial views, and demonstrated that we can train a detector without using any real-world images, achieving 94% mAP.

The system is designed to be modular and adaptable to everyone's needs. It is not a closed solution that does only one thing or can be used in only one way. For example, ROS is optional rather than required, the camera can be either controlled by the physics engine or teleported, and much more.
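To make the difference between the two camera-control modes concrete, here is a minimal Python sketch of a "teleport" mode, written under our own assumptions rather than taken from GRADE's code: instead of letting the physics engine integrate forces, the camera pose for each rendered frame is set directly to a pose interpolated from a recorded trajectory. The `Pose` type, the (timestamp, position, yaw) trajectory format, and the function names are hypothetical.

```python
import math
from bisect import bisect_right
from dataclasses import dataclass

# Illustrative sketch only; these names are hypothetical and not GRADE's API.


@dataclass
class Pose:
    # Hypothetical pose representation: position in meters, yaw in radians.
    x: float
    y: float
    z: float
    yaw: float


def interpolate_pose(times, poses, t):
    """Linearly interpolate a recorded trajectory at time t (clamped to its ends)."""
    if t <= times[0]:
        return poses[0]
    if t >= times[-1]:
        return poses[-1]
    i = bisect_right(times, t)  # guarantees times[i - 1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    a = (t - t0) / (t1 - t0)
    p0, p1 = poses[i - 1], poses[i]
    # Interpolate yaw along the shortest angular path.
    dyaw = math.atan2(math.sin(p1.yaw - p0.yaw), math.cos(p1.yaw - p0.yaw))
    return Pose(
        p0.x + a * (p1.x - p0.x),
        p0.y + a * (p1.y - p0.y),
        p0.z + a * (p1.z - p0.z),
        p0.yaw + a * dyaw,
    )


def teleport_schedule(times, poses, fps, duration):
    """Pose to assign to the camera at each rendered frame; no physics involved."""
    n_frames = int(round(duration * fps))
    return [interpolate_pose(times, poses, k / fps) for k in range(n_frames)]
```

Because each frame's pose is a deterministic function of the recorded trajectory, replaying the same schedule reproduces the same camera path, which is one reason teleporting can be convenient for repeatable experiments.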

Additional information can be found here, in our papers, or in our main repository.

Projects and Papers

GRADE

We use the framework together with our animation converter, environment exporter, and control framework (which can control virtually anything thanks to our expandable custom 6DOF joint controller) to test various robotics scenarios and to build a streamlined pipeline for testing robots and generating ground-truth data. These scenarios include multi-robot settings, active SLAM with three-wheeled omnidirectional robots, indoor autonomous exploration with drones, and experiment repetition with sub-millimeter precision. We demonstrate how the system can be used to test both online and offline robotics systems.
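The control framework above relies on a custom 6DOF joint controller. The Python sketch below shows one way such a controller could be structured; it is our assumption for illustration, not the GRADE implementation. The robot base is treated as if connected to the world through three prismatic joints (x, y, z) and three revolute joints (roll, pitch, yaw), and each joint is driven toward its target with a saturated proportional law. The class name, gains, and velocity limits are hypothetical.

```python
import math
from dataclasses import dataclass, field

# Hypothetical joint layout: three prismatic joints followed by three revolute joints.
JOINT_NAMES = ("x", "y", "z", "roll", "pitch", "yaw")
REVOLUTE = {"roll", "pitch", "yaw"}


def wrap_angle(a):
    """Map an angle to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


@dataclass
class SixDofJointController:
    kp: float = 2.0           # proportional gain (1/s), assumed value
    max_lin_vel: float = 1.0  # m/s, assumed limit for prismatic joints
    max_ang_vel: float = 1.5  # rad/s, assumed limit for revolute joints
    state: dict = field(default_factory=lambda: {n: 0.0 for n in JOINT_NAMES})

    def step(self, target, dt):
        """Move every joint toward its target for one time step; return the new state."""
        for name in JOINT_NAMES:
            err = target[name] - self.state[name]
            limit = self.max_lin_vel
            if name in REVOLUTE:
                err = wrap_angle(err)  # rotate the short way round
                limit = self.max_ang_vel
            # Proportional velocity command, clipped to the joint's velocity limit.
            vel = max(-limit, min(limit, self.kp * err))
            self.state[name] += vel * dt
            if name in REVOLUTE:
                self.state[name] = wrap_angle(self.state[name])
        return dict(self.state)
```

Since every degree of freedom is an ordinary joint in this sketch, the same controller could in principle move a drone, a wheeled base, or a bare camera simply by changing which targets are commanded.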

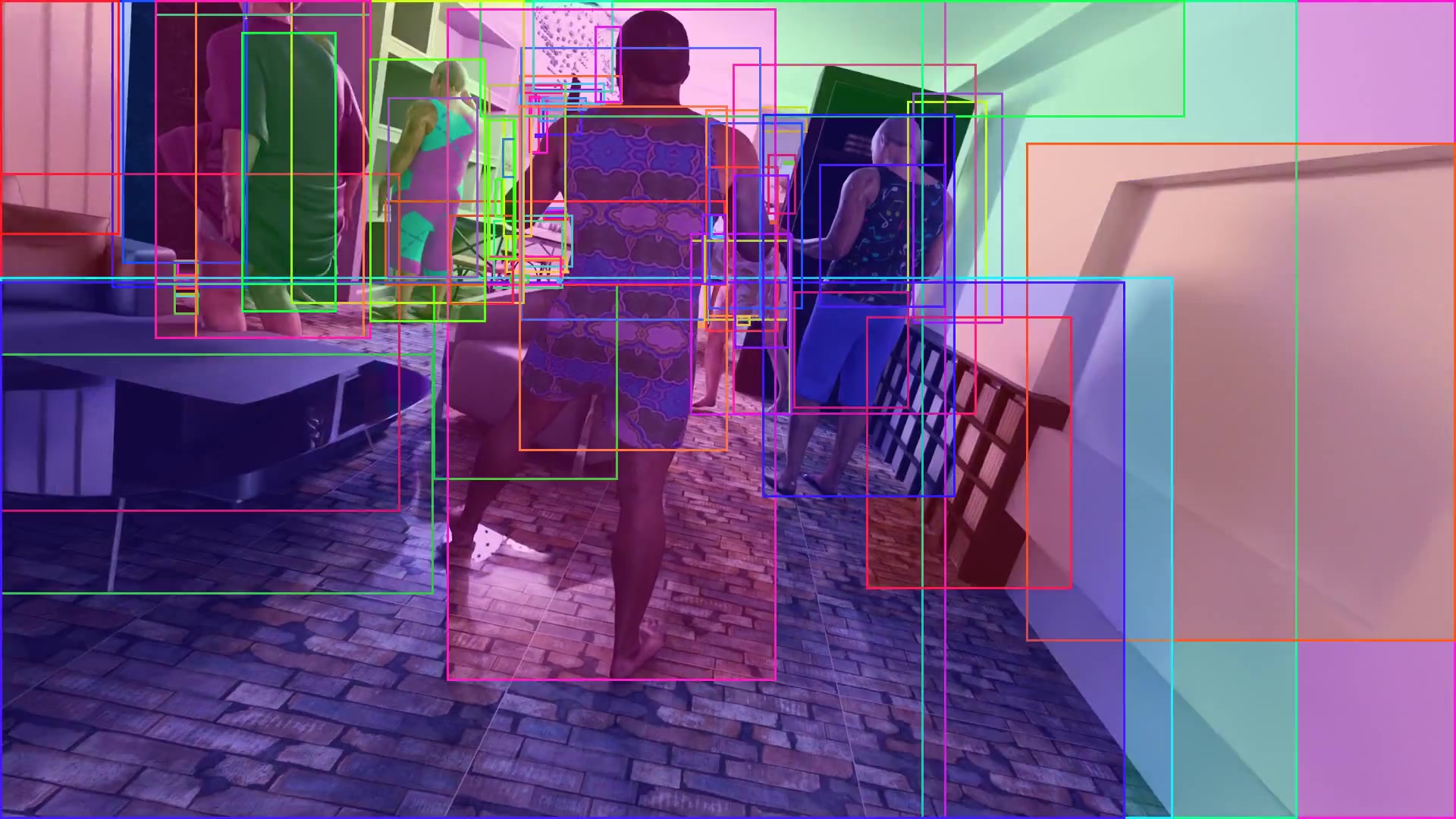

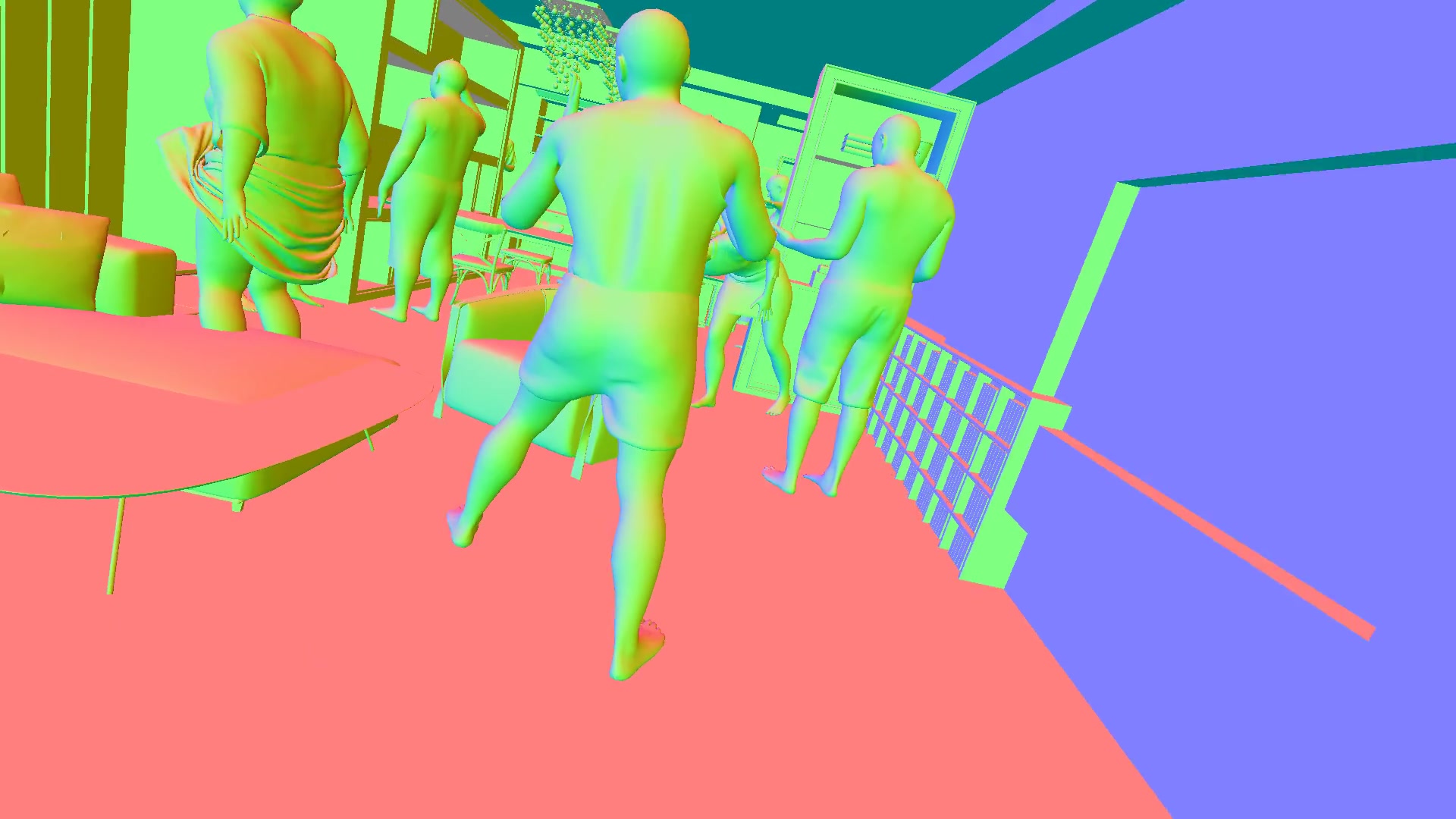

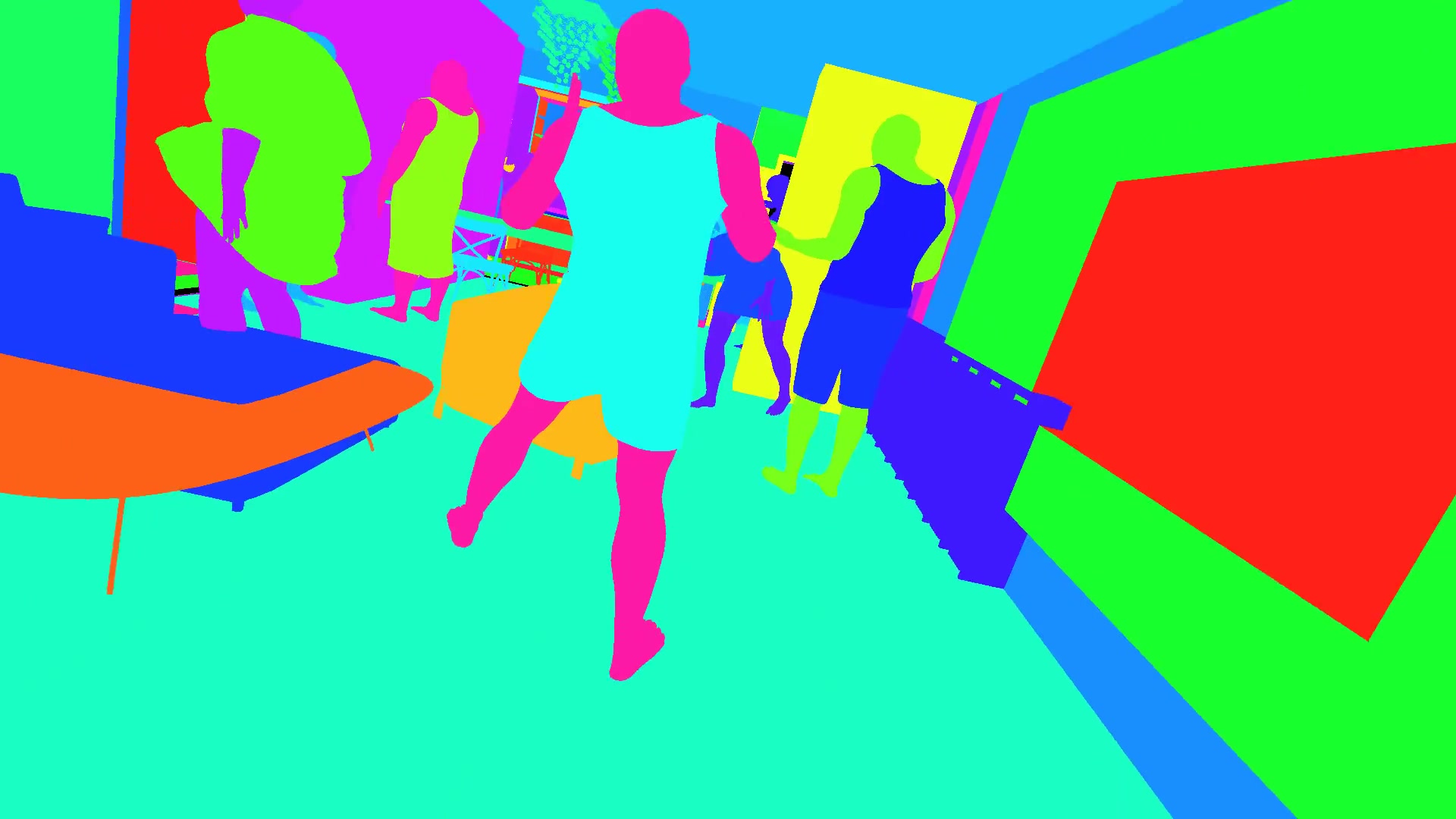

In particular, we generate an indoor dynamic environments dataset by autonomously exploring a set of worlds taken from the 3DFront dataset, populated with animated humans (automatically placed to avoid collisions) and flying objects. The generated data comprise depth, instance segmentation, bounding boxes, and much more. We post-process the generated data, e.g., by adding noise, to evaluate several dynamic SLAM libraries, and we use it to test sim-to-real transfer on human detection and segmentation tasks. We did all of this using our extensive set of tools.
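As a rough illustration of this kind of post-processing, the Python sketch below corrupts a clean depth image (a list of rows, in meters) with zero-mean Gaussian noise whose standard deviation grows quadratically with depth, and marks invalid or out-of-range readings as 0. The noise model, the function name, and the coefficient values are assumptions for illustration, not the parameters used to produce the GRADE dataset; a real pipeline would likely vectorize this with NumPy.

```python
import math
import random


def add_depth_noise(depth, sigma_base=0.001, sigma_quad=0.0025, max_range=10.0, seed=None):
    """Return a noisy copy of a depth image given as a list of rows (meters).

    Assumed noise model: sigma(z) = sigma_base + sigma_quad * z**2.
    Pixels that are non-finite, non-positive, or beyond max_range are set to 0 (invalid).
    """
    rng = random.Random(seed)  # seeded generator for reproducible noise
    noisy = []
    for row in depth:
        out_row = []
        for z in row:
            if not math.isfinite(z) or z <= 0 or z > max_range:
                out_row.append(0.0)  # invalid reading
                continue
            sigma = sigma_base + sigma_quad * z * z
            zn = z + rng.gauss(0.0, sigma)
            # Readings that noise pushed to a non-positive value are also marked invalid.
            out_row.append(zn if zn > 0 else 0.0)
        noisy.append(out_row)
    return noisy
```

Seeding the generator makes a given noisy copy reproducible, so different SLAM methods can be evaluated on exactly the same corrupted inputs.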

With these tests, we showed that many of these SLAM methods cannot recover from failures and exhibit highly degraded performance in dynamic environments, even on very short sequences (60 seconds). Moreover, we show that the synthetic data can be used to successfully detect humans in the real world.

- GRADE: Generating Realistic Animated Dynamic Environments for Robotics Research, Bonetto et al., 2023

- Learning from synthetic data generated with GRADE, ICRA 2023 Workshop on Pretraining for Robotics (PT4R), Bonetto et al., 2023 (OpenReview)

- Simulation of Dynamic Environments for SLAM, ICRA 2023 Workshop on Active Methods in Autonomous Navigation, Bonetto et al., 2023

Synthetic Zebras

In this project, we used the system as a data generation tool to obtain an outdoor synthetic dataset of zebras observed from aerial views. We demonstrated that synthetic data generated with GRADE is visually realistic enough to train detection models directly, and that these models work on real-world images without any fine-tuning or labelled real-world data.

We did this using a variety of environments from Unreal Engine and a freely available zebra model, without physics, ROS, or any other robotics-related component.

- Synthetic Data-based Detection of Zebras in Drone Imagery, IEEE European Conference on Mobile Robots 2023, Bonetto et al., 2023.

Contact

Questions: grade@tue.mpg.de

Licensing: ps-licensing@tue.mpg.de